
Voice Tonality Technology

Attracting the right audience has long been one of marketers' primary requirements for growing their businesses, across sectors. However, as markets grow more competitive, standard marketing practices become commonplace and lose their edge. As marketers look for newer ways to engage customers and, more importantly, to identify purchase intent accurately, voice tonality can help. In this article, we explore the role of voice tonality in marketing and how it can be used to improve your ROI.

What is 'Tone of Voice'?

How a company communicates affects the impression it makes on its audience. Language is understood on two levels: the facts convey the analytical content of the message, while the tone works on an emotional level and influences how the audience feels about the company.

Tone of voice covers all the words a business uses in its content, including sales emails, product brochures, call-center scripts, and client presentations. It is not just about writing well or having strong messaging; it goes further, giving your communications a distinctive voice.

Consistency in tone of voice is crucial to building a reliable and trustworthy brand image. When customers hear the same voice across all your communication channels, it builds their confidence in your company and assures them of a consistent brand experience.

Recently, B2B companies have also started using tone of voice as a way to engage their customers.

Can AI detect the tone of voice?

Failing to monitor what people say about your business is unwise. Fortunately, artificial intelligence (AI) can now analyze not just the words people use but also the emotional intent behind them.

By using AI, businesses can gain insight into how they are perceived. Tone Analyser, for example, is a tool that identifies seven conversational tones: frustration, impoliteness, sadness, sympathy, politeness, satisfaction, and excitement.

Tone Analyser can also recognize the emotional tone of common emojis, emoticons, and slang. Because it is designed for interactions between customers and brands, it can monitor communications, identify anomalies, and highlight opportunities for improvement. It also tracks how tone changes over the course of a conversation and flags when action needs to be taken.
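To make "tracking changes in tone and flagging when action is needed" more concrete, here is a minimal sketch. It assumes some tone classifier has already scored each utterance for the seven tones; the `Utterance` class, the adjective tone labels, the 0.6 threshold, and the "two negative customer turns in a row" rule are hypothetical choices for illustration, not how Tone Analyser itself works.

```python
from dataclasses import dataclass

# Hypothetical labels for the three "warning" tones among the seven
# (frustrated, impolite, sad, sympathetic, polite, satisfied, excited).
NEGATIVE_TONES = ("frustrated", "impolite", "sad")


@dataclass
class Utterance:
    speaker: str              # "customer" or "agent" (hypothetical roles)
    scores: dict[str, float]  # tone -> score in [0, 1], assumed to come from a classifier


def negativity(u: Utterance) -> float:
    """Highest score among the negative tones (0.0 if none were detected)."""
    return max(u.scores.get(t, 0.0) for t in NEGATIVE_TONES)


def flag_escalations(conversation, threshold=0.6, run_length=2):
    """Return indices of customer utterances where negativity has stayed at or
    above `threshold` for `run_length` consecutive customer turns."""
    flags = []
    streak = 0
    for i, u in enumerate(conversation):
        if u.speaker != "customer":
            continue  # only the customer's tone drives the alert in this sketch
        if negativity(u) >= threshold:
            streak += 1
            if streak >= run_length:
                flags.append(i)
        else:
            streak = 0  # a calm turn resets the streak
    return flags


convo = [
    Utterance("customer", {"polite": 0.8}),
    Utterance("agent", {"sympathetic": 0.7}),
    Utterance("customer", {"frustrated": 0.65}),
    Utterance("agent", {"polite": 0.9}),
    Utterance("customer", {"frustrated": 0.8, "impolite": 0.4}),
]
print(flag_escalations(convo))  # [4]
```

Requiring a run of negative turns, rather than a single one, is one simple way to avoid alerting on every isolated frustrated remark; the right rule would depend on the channel and on how reliable the underlying scores are.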

AI also lets you test how your target audience perceives tenders, marketing materials, websites, and presentations, and monitor customer interactions on social media and in call centers.

Dimensions of Tone of Voice

- Funny vs. serious

- Formal vs. casual

- Respectful vs. irreverent

- Enthusiastic vs. matter-of-fact

A brand's tone of voice can sit anywhere along each of these dimensions: at either extreme or somewhere in the middle. In other words, each brand has its own tone of voice, which can be identified as a position within this four-dimensional space.
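One way to picture this space is as a vector with one coordinate per dimension. The sketch below is a minimal illustration under assumed conventions: each dimension is scored from -1.0 (the first pole, e.g. funny) to +1.0 (the second pole, e.g. serious), with 0.0 as the middle ground; the 0.5 cut-off and the example scores are hypothetical. The distance between a piece of content and the brand's target position gives a rough consistency check.

```python
import math

# The four dimensions, as (first pole, second pole).
DIMENSIONS = [
    ("funny", "serious"),
    ("formal", "casual"),
    ("respectful", "irreverent"),
    ("enthusiastic", "matter-of-fact"),
]


def describe(position):
    """Turn a position (one value per dimension; -1.0 = first pole,
    +1.0 = second pole, 0.0 = middle ground) into readable labels.
    The 0.5 cut-off is an arbitrary, hypothetical choice."""
    if len(position) != len(DIMENSIONS):
        raise ValueError("expected one value per dimension")
    labels = []
    for (first, second), value in zip(DIMENSIONS, position):
        if value <= -0.5:
            labels.append(first)
        elif value >= 0.5:
            labels.append(second)
        else:
            labels.append(f"between {first} and {second}")
    return labels


def tone_distance(a, b):
    """Euclidean distance between two tone positions; smaller = more consistent."""
    return math.dist(a, b)


brand = (0.6, -0.2, -0.8, 0.0)   # hypothetical target tone
email = (0.5, 0.0, -0.7, 0.1)    # hypothetical scores for a sales email
tweet = (-0.9, 0.9, 0.3, -0.6)   # hypothetical scores for a social post

print(describe(brand))
# ['serious', 'between formal and casual', 'respectful',
#  'between enthusiastic and matter-of-fact']
print(tone_distance(brand, email) < tone_distance(brand, tweet))  # True
```

How each piece of content gets its scores (human raters, a classifier, or both) is left open here; the point is only that a brand's tone becomes something you can compare across channels.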

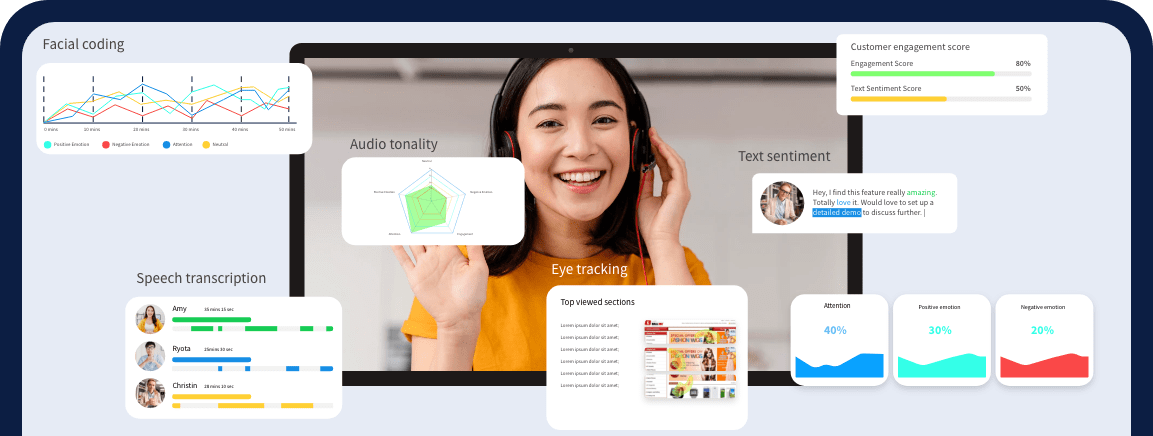

Data Presentation with Voice Tonality

Follow these steps to analyze and present voice tonality data with AI-based tools:

- Collect Data:

The first step in analyzing voice tonality with AI-based tools is to collect data from various channels, such as call center recordings, social media conversations, or online video conferences. The data should be diverse and representative of the target audience, covering different genders, ages, and cultural backgrounds.

- Clean and Pre-process Data:

Next, clean and pre-process the data to ensure accuracy and consistency. This includes removing irrelevant or redundant data, converting audio recordings into a consistent format, and making sure the data is properly labeled and categorized.

- Analyze Data Using AI-Based Tools:

Once the data has been pre-processed, AI-based tools can analyze voice tonality and identify emotional cues such as happiness, sadness, anger, or excitement. Various tools are available for identifying the emotions conveyed through speech, although their accuracy varies.

- Data Visualization:

Once the data has been analyzed, present it in an actionable format. Charts, graphs, and other visualizations make user insights easier to understand. For instance, a chart could show the percentage of positive and negative tones in a conversation, or a graph could highlight how the speaker's tonality changes over the course of the discussion.

- Data Interpretation:

The final step is interpreting the data. The analysis reveals which emotional cues are conveyed through speech and how they affect the listener's emotional response. These insights can also improve emotional AI applications, such as chatbots or virtual assistants, by making them more responsive to emotional cues and better at understanding and responding to human emotions.
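The cleaning, visualization, and interpretation steps can be sketched in a few lines of Python. This is a minimal sketch that assumes the analysis step has already produced one emotion label per speech segment; the record fields (`start`, `speaker`, `emotion`), the `POLARITY` mapping, and the sample data are hypothetical. It drops unlabeled and duplicate segments, computes the share of positive, neutral, and negative tones, and builds a timeline of tone changes that could feed a chart.

```python
from collections import Counter

# Hypothetical mapping from emotion labels to polarity.
POLARITY = {
    "happiness": "positive",
    "excitement": "positive",
    "sadness": "negative",
    "anger": "negative",
    "neutral": "neutral",
}


def clean(segments):
    """Pre-processing: drop segments with a missing or unknown emotion label
    and exact duplicates, then sort by start time (seconds)."""
    seen = set()
    kept = []
    for seg in segments:
        if seg.get("emotion") not in POLARITY:
            continue  # unlabeled or unrecognized
        key = (seg["start"], seg["speaker"], seg["emotion"])
        if key in seen:
            continue  # redundant copy
        seen.add(key)
        kept.append(seg)
    return sorted(kept, key=lambda s: s["start"])


def tone_shares(segments):
    """Percentage of positive, neutral and negative segments (e.g. for a bar chart)."""
    counts = Counter(POLARITY[s["emotion"]] for s in segments)
    total = sum(counts.values())
    return {p: round(100 * counts[p] / total, 1) if total else 0.0
            for p in ("positive", "neutral", "negative")}


def tone_timeline(segments):
    """(start time, +1 / 0 / -1) pairs showing how tone changes over the conversation."""
    score = {"positive": 1, "neutral": 0, "negative": -1}
    return [(s["start"], score[POLARITY[s["emotion"]]]) for s in segments]


raw = [
    {"start": 0.0, "speaker": "customer", "emotion": "neutral"},
    {"start": 4.2, "speaker": "customer", "emotion": "anger"},
    {"start": 4.2, "speaker": "customer", "emotion": "anger"},   # duplicate
    {"start": 9.8, "speaker": "agent", "emotion": None},         # unlabeled
    {"start": 15.1, "speaker": "customer", "emotion": "happiness"},
    {"start": 20.5, "speaker": "customer", "emotion": "excitement"},
]
segs = clean(raw)
print(tone_shares(segs))    # {'positive': 50.0, 'neutral': 25.0, 'negative': 25.0}
print(tone_timeline(segs))  # [(0.0, 0), (4.2, -1), (15.1, 1), (20.5, 1)]
```

Here the timeline reads as a conversation that starts neutral, dips at 4.2 s, and recovers; plotting it would show the shift in tonality the Data Visualization step describes.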

Use Cases of Voice Tonality

Research

Sales enablement

Real-time Insights

Pre-recorded Insights

Challenges with AI-based Voice Tonality

Accuracy

Data privacy and user security

Scalability and cost of deployment
