The promise of using AI for market research has finally been accepted by market researchers across multiple sectors. This change in mindset was propelled by the clear benefits AI tools offer for extracting deeper, more actionable insights from data. Some AI-based techniques have added another dimension to data-driven decision making by helping verify the authenticity of responses gathered through both qualitative and quantitative research.

The results have been staggering.

Advanced AI tools like Natural Language Processing (NLP) and the Facial Action Coding System (FACS) facilitate the micro-analysis of product-user interactions. What used to be a discrete feedback form that respondents filled in after the interaction has been transformed into a continuous stream of audio-visual cues, recorded and analysed frame by frame.

Today, our goal is to show you how FACS actually works and what impact it has on market research, using some real examples and case studies.

But before we dive into that, here’s a brief overview of what FACS stands for.

Decoding FACS: The Craftful AI Wizardry

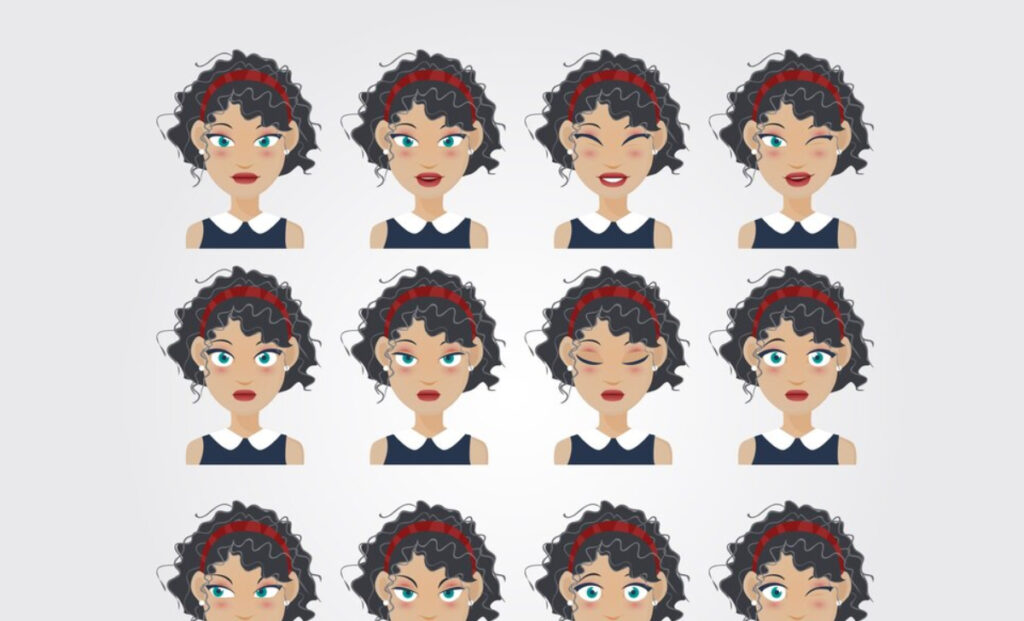

FACS, or the Facial Action Coding System, is a system for describing facial muscle movements, originally developed by psychologists Paul Ekman and Wallace Friesen, that emotion AI uses to recognise emotions. It serves a similar function to the brain’s amygdala, which acts as a processing centre for emotions. We say ‘similar’ and not ‘identical’ because the human brain is capable of recognising and reproducing 27 distinct emotions, while AI models can typically recognise and differentiate between only 8.

However, that doesn’t take away from the inherent utility of FACS for market researchers. The fact that an AI model can read emotions at all is remarkable, and once you couple that with the immense processing power of modern-day computers, you begin to understand the scope of FACS in market research.

When customer-product interactions are recorded and broken down into discrete frames that are later analysed using FACS, a new dimension of consumer insights surfaces, facilitating better decisions at the stakeholder level.

The way FACS does this is clever: it groups certain facial muscle movements together and calls them action units (AUs). One or more action units are then associated with an emotion like happiness, sadness, or excitement. For example, the combination of AU6 (Cheek Raiser), AU12 (Lip Corner Puller), and AU25 (Lips Part) is read as a ‘Happiness’ expression.

As soon as the system detects the action units for an emotion together in a frame, that emotion is flagged and recorded. This has use cases across industries like marketing, animation, entertainment, and content distribution.
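To make the action-unit idea concrete, here is a minimal sketch of rule-based flagging. It assumes each frame has already been reduced to a set of detected AU numbers by some upstream detector, which is not shown. The rule table and function names are hypothetical, and only the ‘happiness’ rule is taken from the example above.

```python
from typing import Dict, FrozenSet, Iterable, List, Tuple

# Hypothetical rule table: each emotion maps to the action units (AUs)
# that must all be present in a frame. Only the 'happiness' rule comes
# from the article; a real system would define many more.
EMOTION_RULES: Dict[str, FrozenSet[int]] = {
    "happiness": frozenset({6, 12, 25}),
}


def flag_emotions(active_aus: Iterable[int]) -> List[str]:
    """Return the emotions whose required AUs are all active in one frame."""
    active = set(active_aus)
    return sorted(
        emotion
        for emotion, required in EMOTION_RULES.items()
        if required <= active  # subset test: every required AU is present
    )


def flag_frames(frames: Iterable[Iterable[int]]) -> List[Tuple[int, List[str]]]:
    """Pair each frame index with the emotions flagged in that frame."""
    return [(index, flag_emotions(aus)) for index, aus in enumerate(frames)]


if __name__ == "__main__":
    # Illustrative frames only, not real detector output.
    frames = [
        {6, 12, 25},     # all three happiness AUs present
        {12, 25},        # AU6 missing, so nothing is flagged
        {4, 6, 12, 25},  # an extra AU does not prevent a match
    ]
    for index, emotions in flag_frames(frames):
        print(index, emotions)
```

A subset test is used here so that additional active action units do not block a match. Whether a production system uses strict rules like this or a learned classifier is not stated in this article.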

We have put together a few real-world examples to substantiate this claim.

Some Real World Examples of FACS in Market Research

- Comparing the Engagement Value of Warner Bros Trailers Using Insights Pro

Insights Pro is one of our premier products that uses advanced AI tools like Natural Language Processing and FACS to uncover emotional insights from data. In the Warner Bros Trailer Testing Case Study, a meticulous analysis of two trailers was conducted to assess emotional responses and offer inputs to the creative team.

Both trailers were evaluated second by second and showed high engagement scores, with Trailer 2 slightly outperforming the Official Trailer. Character-wise analysis revealed the significant influence of Kevin Hart’s character and Superman’s dog on joy scores. Moments of humour, such as Superman’s dog waking him up and Kevin Hart’s character serving as a meat shield, elicited strong joy responses in the Official Trailer. The joy scores were assigned based on tonal and facial analysis.

Similarly, Trailer 2 garnered joy with scenes like the dog playing catch with a Batman toy. Despite positive responses to both trailers, the Official Trailer generated more happy responses overall, indicating its stronger connection with viewers. This case study underscores the importance of character representation and emotional engagement in creating effective trailers that resonate with audiences, ultimately driving ticket sales and cementing Warner Bros’ position as a leading film studio.
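A second-by-second evaluation of this kind can be sketched as follows. This is only an illustration, assuming each respondent yields one joy score per second of the trailer. The function names, the respondent labels, and the numbers are invented placeholders, not data from the study or the Insights Pro API.

```python
from statistics import mean
from typing import Dict, List


def per_second_mean(scores_by_respondent: Dict[str, List[float]]) -> List[float]:
    """Average the joy score across respondents for each second."""
    # zip(*...) regroups the per-respondent lists into per-second tuples.
    per_second = zip(*scores_by_respondent.values())
    return [mean(second) for second in per_second]


def peak_second(curve: List[float]) -> int:
    """Return the index of the second with the highest average joy score."""
    return max(range(len(curve)), key=curve.__getitem__)


# Placeholder values invented for illustration only, not study data.
trailer_scores = {
    "respondent_a": [0.0, 0.5, 1.0, 0.5],
    "respondent_b": [0.0, 0.5, 0.5, 0.0],
}

curve = per_second_mean(trailer_scores)
print(curve)               # [0.0, 0.5, 0.75, 0.25]
print(peak_second(curve))  # 2
```

Peaks in a curve like this are what would let an analyst tie a joy response back to a specific scene or character.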

- FACS for Creating Realistic Animations

Creating realistic facial expressions using CGI is a monstrous challenge, as it requires painstaking face modelling, animation, and rendering. Techniques like FACS and MPEG-4 Facial Animation are used to create lifelike fictional characters. FACS in particular has revolutionised facial animation by offering a standard approach for encoding expressions. One of its key applications is muscle-based animation, where it guides muscle activations in systems like the Facial Expression Solver, employed in major film productions such as “King Kong” (2005).

By mapping FACS poses onto digital puppets, these systems can generate thousands of nuanced facial expressions, facilitating realism in CGI. You can read more about how FACS is used in animation here.

-

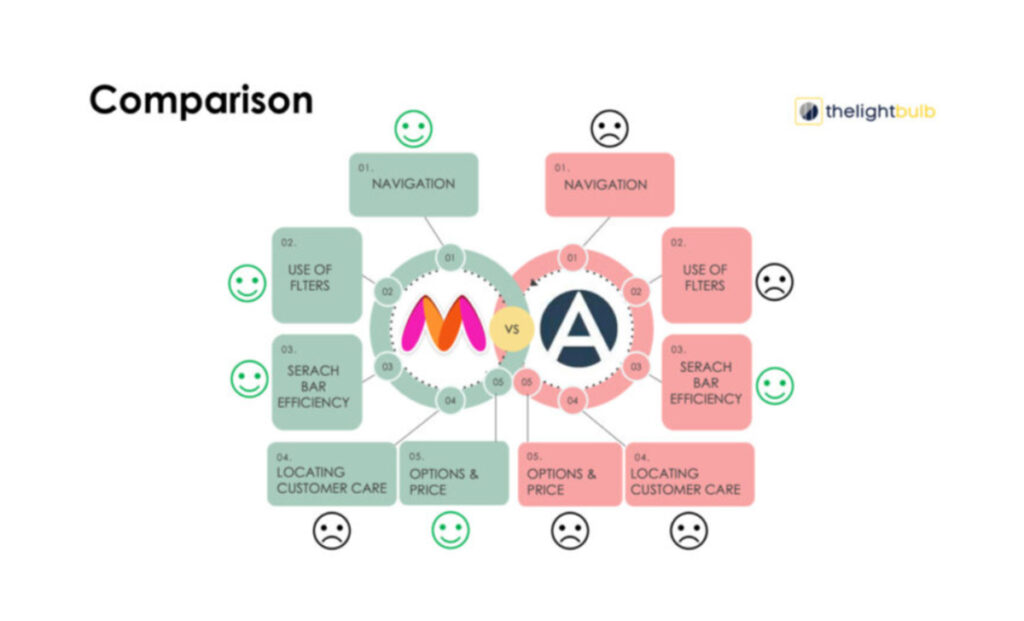

Decoding Online Shopping Experiences with FACS

Lightbulb conducted a case study to understand online shopping experiences by analysing the emotional profile of users as they interacted with two popular online shopping platforms, Ajio and Myntra. Insights Pro was used, with objectives centred on action point analysis and emotional response measurement. The study delved into user journeys, revealing preferences and pain points. Participants, aged 20 to 35, browsed both platforms, and their sessions highlighted search bar efficiency, filter usage, and customer support accessibility. While both platforms offered delights like product variety and discount coupons, Myntra emerged as the preferred choice due to its superior filters and search bar efficiency.

For Ajio, participants navigated through a three-step purchase journey, highlighting preferences in search bar usage and filter utilisation. Delight was found in product variety and recently viewed options, while pop-ups posed a pain point.

Myntra’s journey mirrored Ajio’s, with users appreciating price sliders and discount coupons while expressing frustration over the help centre’s accessibility.

- Evaluating Efficacy of Nike’s Video Ads With FACS

The effectiveness of Nike’s video ads was evaluated using the Facial Action Coding System (FACS) in tandem with emotion AI tools like tonal analysis and eye tracking. The aim was to dissect the emotional impact and attention-grabbing potential of Nike’s campaigns. By analysing respondents’ facial expressions and eye movements, coupled with sentiment analysis, the study unearthed pivotal insights into audience engagement and brand perception.

Nike used these insights to refine its advertising strategies and resonate more effectively with its target audience, underscoring the transformative power of AI in modern marketing research. You can watch the full case study here.

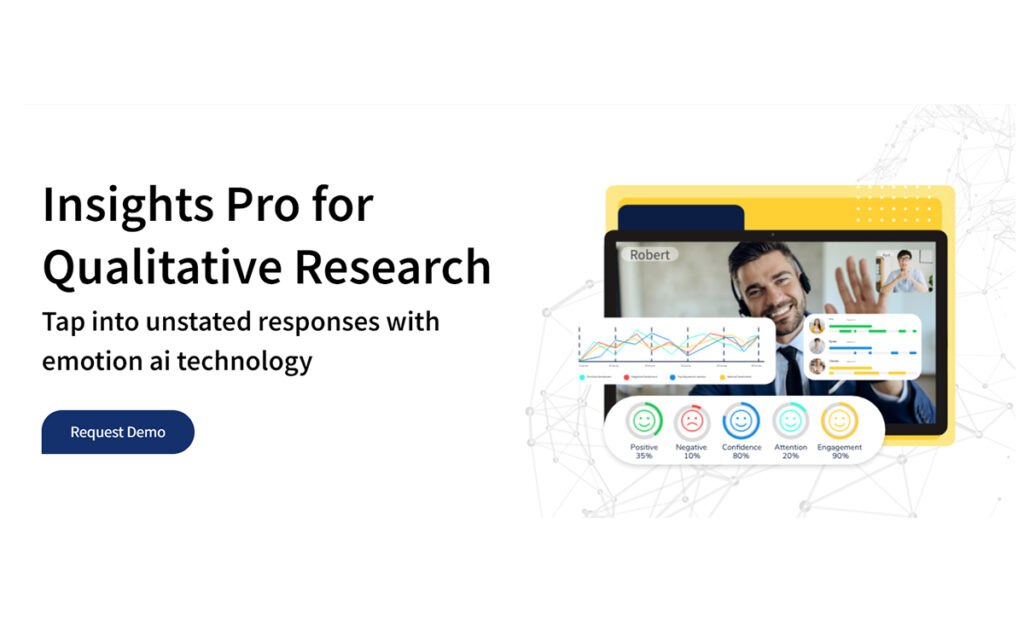

About Insights Pro

Insights Pro is an AI-based ad testing tool that combines emotional intelligence with new-gen AI tools like speech transcription, facial coding, text sentiment analysis, and eye tracking to generate an emotional heatmap of the interaction between a viewer and a creative. It also offers AI-based databases and tools for creative optimisation to accelerate the creative testing process.

This tool has taken AI-backed creative testing one step ahead of the competition by integrating emotional intelligence into the AI equation, facilitating quicker decisions backed by real data and insights.